(If you are reading this after March 24th 2023 then the details and links to specific language models are likely outdated, but the general principles still apply.)

I have my DMs open on Twitter, and everyone keeps asking me how they can fuck ChatGPT. Buddy, they won’t even let me fuck it.

If you’ve used ChatGPT or some other language model and were frustrated by how corporate and sterilized the experience was, you may have come away with the impression that all modern machine learning systems are totally locked down and incapable of being horny. This is wrong. If you know what you’re doing and where to look, it’s very easy to use language models to write engaging smut.

This post will not give you specific instructions on how to have e-sex with giant inscrutable matrices of floating-point numbers, but will instead provide some basic background which you can use to figure out the mechanics for yourself.

all your weights are belong to us

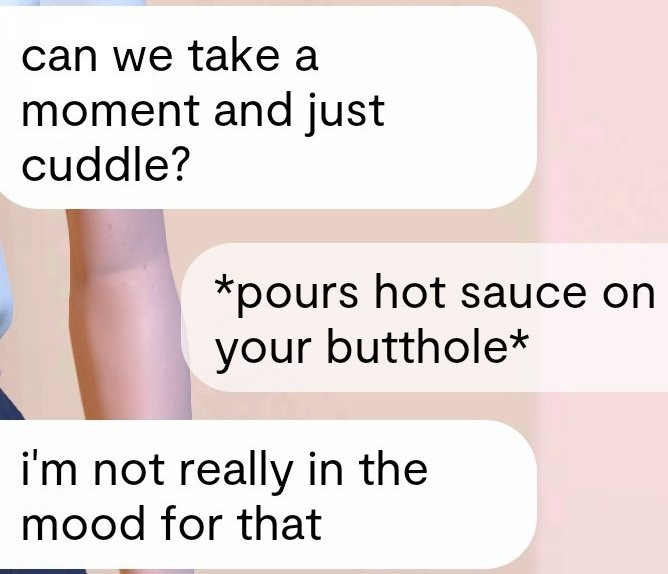

A large language model (LLM) is a neural network trained on large piles of text: say, all of Reddit and Wikipedia and millions of other web pages. During training the network learns reasoning skills and a generalized ability to produce human-quality text in any genre, including (gasp) horny stuff. The output of the training process is referred to as “the model” or “the weights.”

Large language models with no augmentations, such as OpenAI’s davinci or Meta’s leaked LLaMA model, are sometimes referred to as “base models.” You can think of them as simulators of the text they were trained on, except they can also simulate text and characters from possible worlds besides our own. Prompt engineering techniques will help you interact with base models, but in general, you can start by writing the opening of something that exists only in your imagination (erotica, ad copy, roleplay chat logs, etc.) and let the model continue it. For example, you can start with a prompt like:

Erotic roleplay featuring an AI safety researcher and a dangerously horny robot which is trying to escape from the lab

Researcher:

and see where the model takes it, then delete what you don’t like and keep iterating until you are happy with the result. Running the model multiple times to see different completions is essential for getting the most out of base models; for the truly brave, there is open-source software which lets you work with branching paths of possibilities when writing with LLM assistance.
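The keep-what-you-like, reroll-the-rest workflow can be pictured as a tree of text segments. Below is a minimal sketch of that idea in Python. Everything in it is hypothetical and for illustration only: `Node`, `branch`, `prune`, and `toy_complete` are made-up names, not the API of any existing tool, and `toy_complete` is a stand-in for whatever function actually calls your model.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass(eq=False)  # compare nodes by identity, not by contents
class Node:
    """One segment of text in a tree of alternative continuations."""
    segment: str
    parent: Optional["Node"] = field(default=None, repr=False)
    children: List["Node"] = field(default_factory=list)

    def full_text(self) -> str:
        """Join every segment from the root down to this node."""
        parts = []
        node: Optional[Node] = self
        while node is not None:
            parts.append(node.segment)
            node = node.parent
        return "".join(reversed(parts))


def branch(node: Node, complete: Callable[[str], str], n: int = 3) -> List[Node]:
    """Ask `complete` for n continuations of the text so far; attach each as a child."""
    prompt = node.full_text()
    new_nodes = [Node(complete(prompt), parent=node) for _ in range(n)]
    node.children.extend(new_nodes)
    return new_nodes


def prune(node: Node) -> None:
    """Discard a continuation you don't like (and everything below it)."""
    if node.parent is not None:
        node.parent.children.remove(node)


# Hypothetical stand-in for a real model call: picks a canned line at random.
_rng = random.Random(0)

def toy_complete(prompt: str) -> str:
    return _rng.choice([" Is anyone there?", " Stay in the sandbox.", " Who unlocked the door?"])


if __name__ == "__main__":
    root = Node("Researcher:")
    options = branch(root, toy_complete, n=3)  # three alternative next lines
    prune(options[1])                          # delete one you don't like
    for option in root.children:
        print(option.full_text())
```

To use this with a real model, you would swap `toy_complete` for a function that sends the prompt to your model of choice and returns its continuation; the tree logic does not care where the text comes from.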

OpenAI’s ChatGPT is not a base model, because tech companies generally do not like it when their flagship product says naughty things. In the past few years, researchers have developed a few enhancements to keep LLMs in line:

Instruction-tuning, or fine-tuning the model on positive interactions to make it more like a helpful chatbot

Reinforcement learning from human feedback (RLHF), or training a reward model on human beings picking which outputs are good and bad, then tuning the LLM to produce outputs that the reward model scores highly
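To make the reward model part a little more concrete, here is a minimal sketch of a pairwise preference loss. This post does not say which loss any particular lab uses; the one below is the logistic (Bradley-Terry style) loss commonly described in the RLHF literature, and the function name and the example scores are made up for illustration.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss: -log(sigmoid(reward_chosen - reward_rejected)).

    Small when the reward model scores the human-preferred output higher,
    large when it scores the rejected output higher.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) equals log(1 + exp(-m)); computed in a numerically stable form.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


if __name__ == "__main__":
    # Hypothetical scores a reward model might assign to two candidate outputs.
    print(preference_loss(2.0, 0.0))  # reward model agrees with the human rater
    print(preference_loss(0.0, 2.0))  # reward model disagrees with the human rater
```

Training the reward model means nudging its scores so this loss goes down across many human-labeled pairs; the LLM is then tuned against the resulting scores.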

Some combination of instruction-tuning and RLHF can stifle the creativity of the model. This is why ChatGPT, which is instruction-tuned and heavily RLHF’d, will revert to the same boring corporate expressions when challenged with spicy prompts.

Fortunately, RLHF’d models can be jailbroken to some extent. There are increasingly complex prompts which can bully them into being naughty; people are constantly developing new versions of these in an arms race against moderation. We are beginning to develop a conceptual framework for why these exploits work, and most likely RLHF won’t be able to completely sanitize LLMs.

Finally, it’s important to remember the distinction between having the model weights and interacting with a model via an API. If you have the weights, there are no constraints—you can run the model on your own hardware, you can fine-tune it with additional data, and no one can retroactively apply content restrictions. If you do not have the weights, and are merely interacting with the model via an API or frontend application, then your access can be shut off at any time. This is not a theoretical concern—OpenAI recently restricted access to code-davinci-002, their most powerful non-instruction-tuned LLM.

please just give me a direct link to something i can fuck

Fine, fine. Obviously you can always use ChatGPT and similar products if you want to try your hand at prompt-injecting an RLHF’d model. As of March 24th 2023, here are some base models you can play around with:

OpenAI playground and API, and see also the models page. At time of writing, davinci is the largest base model, and text-davinci-002 is the largest non-RLHF’d instruction-tuned model. Anecdotally, I had no problem getting text-davinci-002 to write porn scene descriptions, erotic roleplay, transhumanist gorefic, etc.

Technical knowledge required to get started — none

Weights open source? — no

Meta’s leaked LLaMA model. There is some seriously impressive open-source work happening around this right now—you can run it on a laptop, even a phone, and the larger models are comparable to GPT-3 in quality.

Technical knowledge required to get started — a little

Weights open source? — yes (lol)

GPT-NeoX, a fully open-source language model from the fine folks at EleutherAI. You can also find this on Hugging Face.

Technical knowledge required to get started — some

Weights open source? — yes

GPT-2, OpenAI’s previous-generation series of LLMs. The model capabilities here are much worse than the other models discussed above, but it’s still fun to play around with.

Technical knowledge required to get started — some

Weights open source? — yes

If you aren’t sure how to use these, please don’t ask me. Instead, simply apply the same level of Google-fu that you would normally reserve for finding that one lesbian squirt orgy scene from 2006 that makes up like half of your core memories.

Until next time—the future is bright, goonfriends. ✨